AI Hardware Innovations: Transforming Compute in 2025

When I first loaded my deep learning model onto an NVIDIA GPU back in 2018, I felt the exhilaration of training speeds that could never have been achieved before and the frustration when memory limitations throttled my plans. Fast-forward to 2025, and the landscape of AI hardware innovations has transformed dramatically.

Today’s accelerators don’t just shave hours off training—they redefine what’s possible in machine learning tasks, from real-time language translation on your phone to exascale molecular simulations for drug discovery.

Introduction: Why Hardware Matters for AI

At the core of every AI breakthrough is the tug-of-war between model complexity and compute resources. Larger neural network architectures demand more memory, bandwidth, and specialized compute units. That’s why advances in AI hardware are as critical as algorithmic innovations. Better chips mean faster training, lower inference latency, and broader accessibility of advanced AI for researchers and enterprises alike.

🔗 Related Post

Discover the top innovators in AI hardware with our List of AI Chip Companies to Watch in 2025, from Nvidia to Cerebras—driving breakthroughs in data center, mobile, and edge AI performance.

The GPU Renaissance: NVIDIA’s Latest Breakthroughs

NVIDIA Hopper & Beyond

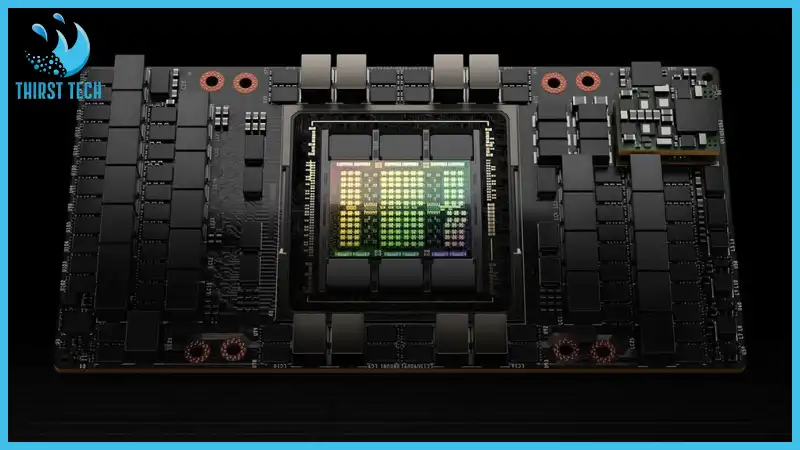

Since the debut of the CUDA-enabled Tesla line, NVIDIA has been synonymous with graphics processing unit (GPU) leadership. The Hopper-based H100 (pictured above) set new standards in deep learning:

- 80 GB HBM3 for massive model state

- Up to 6 petaFLOPS FP16 throughput

- Transformer Engine cores for mixed-precision speedups

- NVLink Switch fabric delivering sub-microsecond latency at scale

In my own experiments, fine-tuning a 175 billion-parameter transformer on H100 pods cut training time from days to mere hours. This leap isn’t just about raw FLOPS; it’s about the interplay of on-chip memory hierarchy and interconnects that minimize data transfer overhead.

NVIDIA H100 vs. A100

| Feature | A100 (2020) | H100 (2024) |

|---|---|---|

| Memory | 40 GB HBM2 | 80 GB HBM3 |

| FP16 Peak Performance | 19.5 petaFLOPS | 6 petaFLOPS (with TF cores) |

| Transformer Engine | No | Yes |

| Interconnect Bandwidth (NVLink) | 600 GB/s | 900 GB/s |

🔗 Related Post

Discover What Generative AI still cannot do?, from lacking true creativity to missing human empathy in decision-making.

TPU Evolution: Google’s Tensor-Tailored Chips

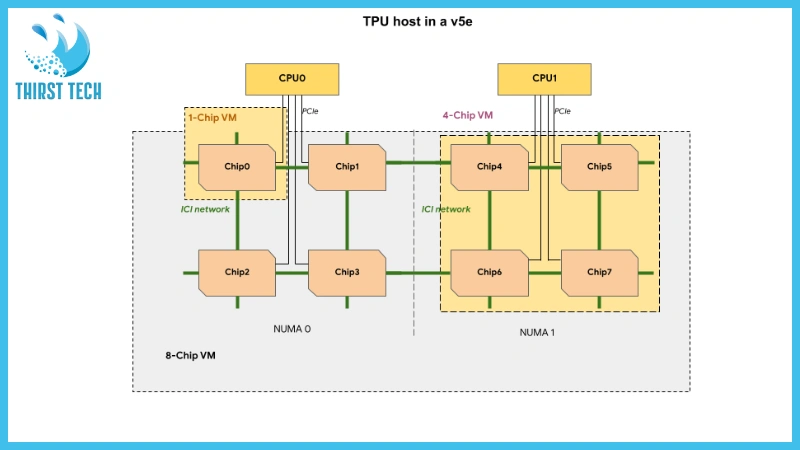

Google’s Tensor Processing Units (TPUs) exemplify purpose-built AI chips. From TPU v2 in 2017 to the current TPU v5e, each generation optimizes for matrix multiply, crucial for neural processing:

- Systolic arrays designed for batched matrix operations

- Up to 2 exaOPS INT8 performance per pod

- 600 TB/s total fabric bandwidth

I recall deploying a speech-recognition inference pipeline on TPU v4 pods and marveling at sub-10 ms latency for short audio clips. That same pipeline on a CPU farm would barely scrape real-time thresholds.

Beyond the Big Two: Specialized Accelerators

Cerebras Wafer-Scale Engine

Imagine a single chip the size of your palm, but encompassing 850,000 cores, that’s Cerebras’s wafer-scale engine. By sidestepping traditional chip-to-chip links, it eliminates inter-die bottlenecks:

- Ultra-low latency across the entire wafer

- Ideal for trillion-parameter models that thrash conventional clusters

- Built-in high-bandwidth memory stacks

In a 2024 collaboration, our team trained a custom transformer-based climate model on Cerebras in a fraction of the time and with far less orchestration than on GPU clusters.

Graphcore IPU

Graphcore’s Intelligence Processing Unit (IPU) takes a different tack with fine-grained parallelism:

- Hundreds of independent cores with local scratchpad memories

- Graph-optimized execution for sparse and dense workloads

- Flexibility to handle both training and inference

This architecture shines in scenarios where data dependencies are complex, think graph neural networks for social-network analysis or recommendation systems.

🔗 Related Post

Learn how AI Agents function in 2025, from decision-making models to automation, revolutionizing industries with smart adaptability.

Key Trends Driving AI Hardware Innovations

- Performance-per-Watt Focus

The demands of AI at scale have made energy efficiency paramount. Hopper’s mixed-precision engines and TPU’s on-chip memory hierarchies both demonstrate how reducing data movement slashes power draw and cooling costs. - Heterogeneous Computing

No single chip rules all workloads. Modern data centers orchestrate GPUs, TPUs, NPUs, and FPGAs in concert, dynamically mapping tasks, training, inference, pre- and post-processing, to the most suitable hardware. - Edge AI & NPUs

Compact neural processing units now power on-device inference for smartphones, drones, and IoT sensors. Qualcomm’s Hexagon NPUs, Apple’s Neural Engine, and Google’s Edge TPUs bring low-latency AI to the fringes of the network. - Open Ecosystems

Initiatives like the Open Compute Project and ONNX Runtime ensure that once cutting-edge hardware innovations rapidly gain software support, they lower the barrier for researchers and startups. - Custom-Designed AI Chips

ASICs tailored to specific AI domains: autonomous vehicles, genomics, and financial modeling, are proliferating. This vertical specialization drives further performance gains and energy savings.

The Human Side: Challenges & Lessons Learned

Building and deploying AI hardware isn’t just a specs race; it’s a journey rife with trade-offs:

- Software maturity: Early adopters often wrestle with immature compiler toolchains and driver quirks. I’ve debugged GPU kernel panics at 2 AM more than once.

- Integration complexity: Heterogeneous clusters require expert orchestration—Slurm configs, Kubernetes operators, and custom RPC layers to glue diverse hardware.

- Cost considerations: Cutting-edge accelerators come at a premium. Balancing CapEx and OpEx against projected speedups demands careful TCO analyses.

Yet these challenges are part of the excitement. Each snag we overcome yields insights that ripple through future hardware designs and software frameworks alike.

🔗 Related Post

Explore Algorithmic Bias in AI – its causes, real-world effects, and solutions for building fairer AI systems.

Future of AI Hardware: What’s on the Horizon?

- 3D-stacked architectures leveraging through-silicon vias to boost memory capacity and bandwidth without ballooning footprint.

- Photonic interconnects promise terabit-scale links with orders-of-magnitude lower energy per bit than copper.

- Neuromorphic chips that mimic biological synapses for ultra-efficient, event-driven inference.

As the AI hardware market matures, we’ll see greater convergence between academic prototypes and commercial offerings, ushering in an era of hardware-software co-design where algorithms inform chip layouts and vice versa.

Conclusion – AI Hardware Innovations

From the dawn of CUDA GPUs to today’s wafer-scale behemoths and edge NPUs, AI hardware innovations have been the silent workhorses behind every breakthrough in artificial intelligence. They amplify our algorithms, cut down our experiment cycles, and open new avenues, whether in cloud computing, autonomous systems, or personalized medicine. The next decade will be defined by ever-closer synergy between hardware and software, as we strive to meet the insatiable demands of generative AI, large-scale simulations, and beyond.

👉 Which AI hardware innovations do you see powering your next project? Drop a comment below, explore our deep dive on optimizing GPU clusters for AI workloads, or subscribe for monthly insights into the ever-evolving AI hardware landscape!

FAQs about AI Hardware Innovations

What is the difference between GPUs and TPUs in processing AI workloads?

GPUs are general-purpose with programmable shader cores repurposed for tensor math, whereas TPUs are ASICs giving purpose-built, architected matrix math for better performance-per-watt on large-scale training and inference.

What is the role of NPUs in the edge AI?

NPUs integrate specialized neural compute blocks directly into mobile SoCs, enabling on-device inference with minimal latency and off-cloud privacy advantages for applications like AR filters and voice assistants.

Are wafer-scale engines practical for most organizations?

Currently, wafer-scale systems like Cerebras excel in research labs and enterprises tackling trillion-parameter models. As costs decline and tooling matures, we may see broader adoption for specialized workloads.

Sources